Retry scoring translations

Once your model is trained, the test sets from the Dataset(s) you used are translated with your model and with its parent model. Each of these translations is compared to its provided reference. The result of the comparison is a BLEU score measuring the quality of the translation. If the scoring process failed for some reason during the training of your model, you can launch it again.
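To make the comparison more concrete, here is a minimal sketch of how a corpus-level BLEU score can be computed for two sets of translations against the same references. It is an illustration only, not the scoring code used by the platform: the function names, the whitespace tokenization, the single reference per sentence, and the absence of smoothing are all assumptions made for this example. Established tools such as sacreBLEU apply their own tokenization and will give different numbers.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses, references, max_n=4):
    """Corpus-level BLEU on a 0-100 scale.

    Assumptions: one reference per sentence, whitespace tokenization,
    no smoothing (the score is 0 if any n-gram order has no match).
    """
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        hyp_tokens, ref_tokens = hyp.split(), ref.split()
        hyp_len += len(hyp_tokens)
        ref_len += len(ref_tokens)
        for n in range(1, max_n + 1):
            hyp_counts = ngrams(hyp_tokens, n)
            ref_counts = ngrams(ref_tokens, n)
            # Counter intersection clips each n-gram count by the reference count.
            matches[n - 1] += sum((hyp_counts & ref_counts).values())
            totals[n - 1] += sum(hyp_counts.values())
    if hyp_len == 0 or min(matches) == 0:
        return 0.0
    # Geometric mean of the n-gram precisions, computed in log space.
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # The brevity penalty lowers the score when the output is shorter than the reference.
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * brevity_penalty * math.exp(log_precision)


# Hypothetical test set: the same references score both models.
references = ["the cat sits on the mat near the door", "it is raining today"]
model_output = ["the cat sits on the mat near the door", "it is raining today"]
parent_output = ["the cat sits on the mat by the door", "it rains today"]

model_score = corpus_bleu(model_output, references)
parent_score = corpus_bleu(parent_output, references)
print(f"model BLEU: {model_score:.2f}")
print(f"parent BLEU: {parent_score:.2f}")
```

Scoring both outputs against the same references is what makes the two numbers comparable: a higher score for your model than for its parent suggests that training moved the translations closer to the references.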

There are two ways to relaunch the scoring process.

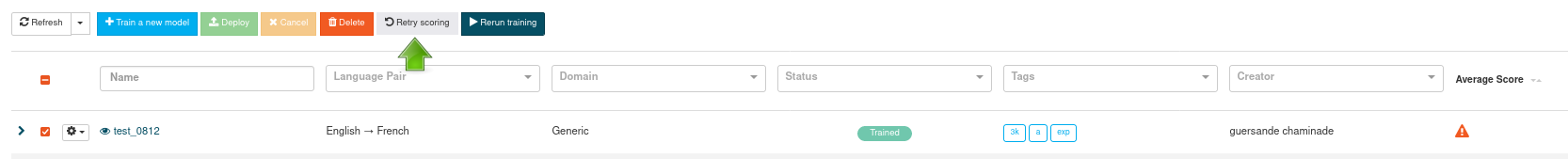

Tick the box next to the model, then click on the Retry scoring button:

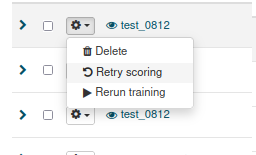

Click on the cog next to the model, and choose Retry scoring from the drop-down menu:

Note

You can retry scoring only for models whose scoring initially failed. For any other purpose, please launch an Evaluation.

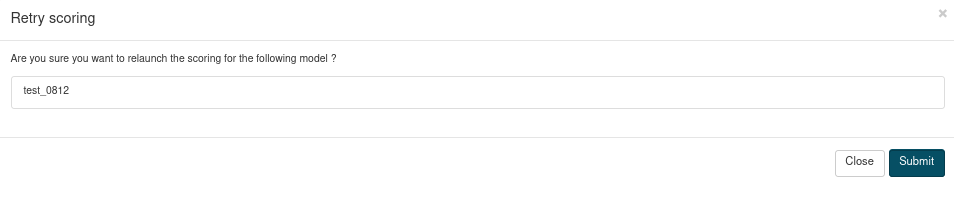

Click on Submit to confirm.

Clicking on Close or on the X in the top right corner closes this confirmation window without launching the scoring.

It is possible to retry scoring for several models at once.